❗ This is not a tutorial ❗

Continuing from https://zalgorithm.com/loading-more-posts-from-an-archive-route/, the Remix app’s Archive page is loading posts from the WordPress database in batches of 15. If there are more posts to load, a “Next Posts” button is displayed at the bottom of the screen. Clicking that button takes you to /blog/archive/$cursor. $cursor is set to the value of the cursor property of the previous batch’s last post.

(Edit: the approach I eventually settled on is outlined in https://zalgorithm.com/pagination-take-five-success/.)

This works, but it creates ugly URLs: /blog/archive/YXJyYXljb25uZWN0aW9uOjk4. It’s also not a great user interface. It needs to supply more context and ways of skipping around and filtering batches of posts.
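As an aside, the cursor in that URL is not random noise: it looks like a base64-encoded string. A minimal sketch for inspecting one, assuming the standard `atob` global (available in browsers and recent Node versions); `decodeCursor` is a hypothetical helper name, not something from the app:

```typescript
// Hypothetical helper: decode an opaque base64 cursor for inspection.
// The decoded format is a server implementation detail, so application
// code should keep treating the cursor as an opaque string.
function decodeCursor(cursor: string): string {
  return atob(cursor);
}

console.log(decodeCursor("YXJyYXljb25uZWN0aW9uOjk4")); // → arrayconnection:98
```

This is only useful for debugging; the encoded value is what has to be sent back as the after argument.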

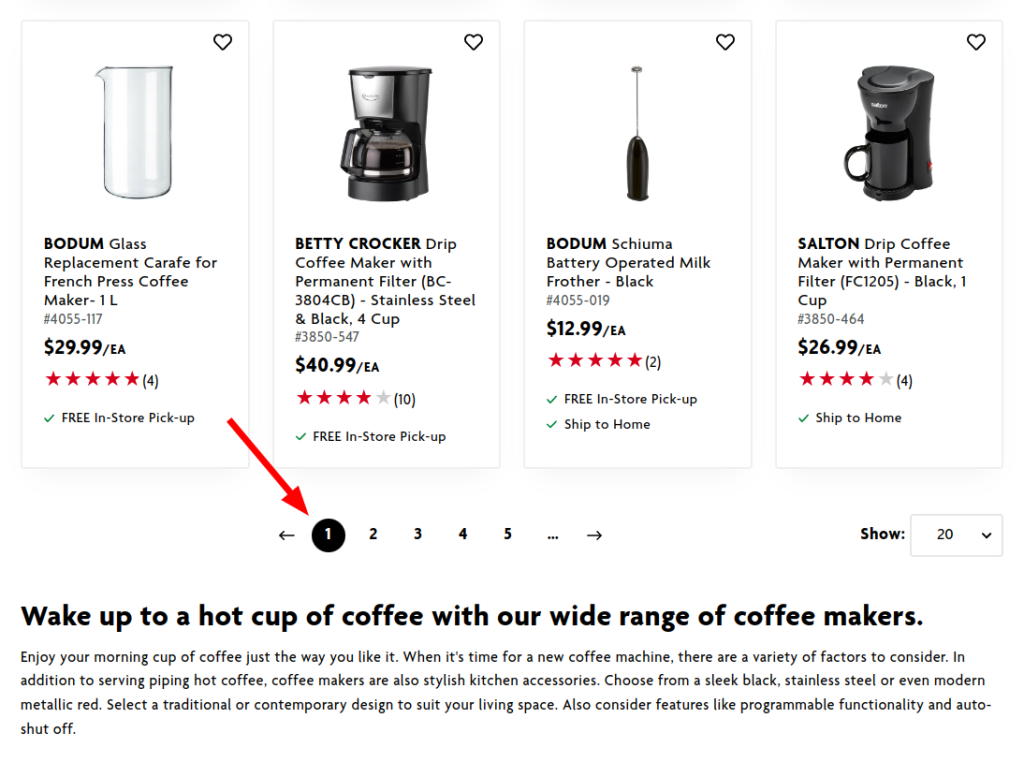

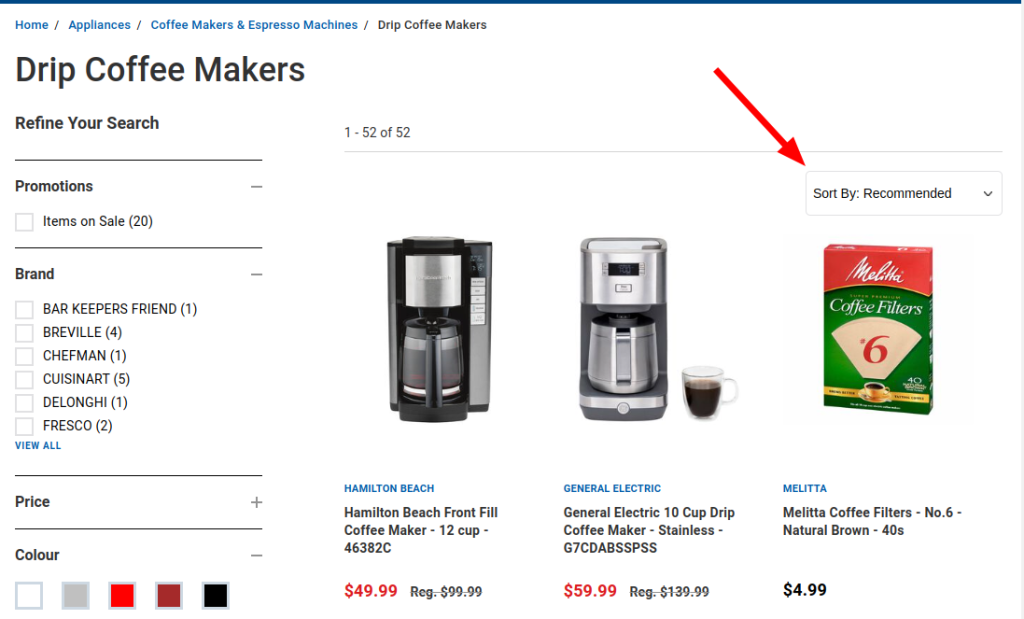

Since I’m currently looking for gaskets for a stove top espresso machine:

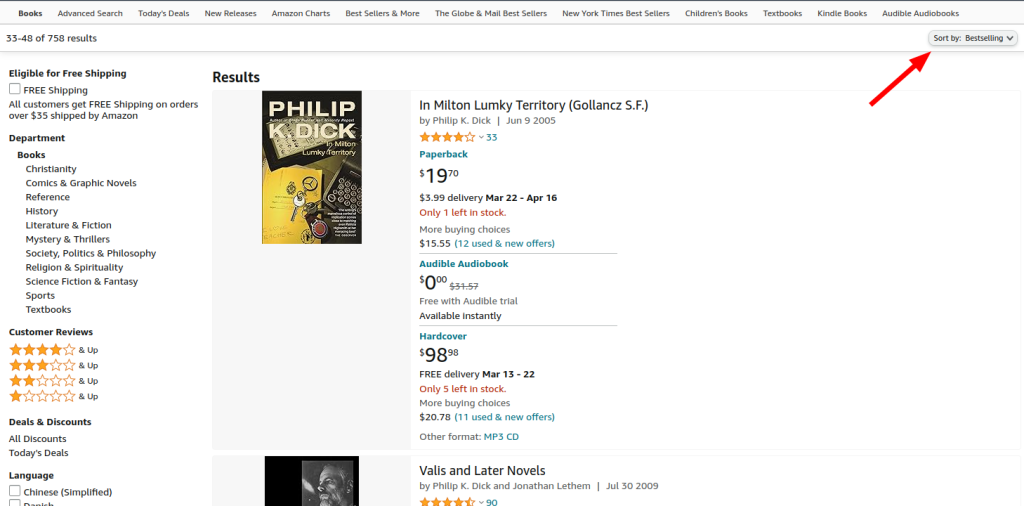

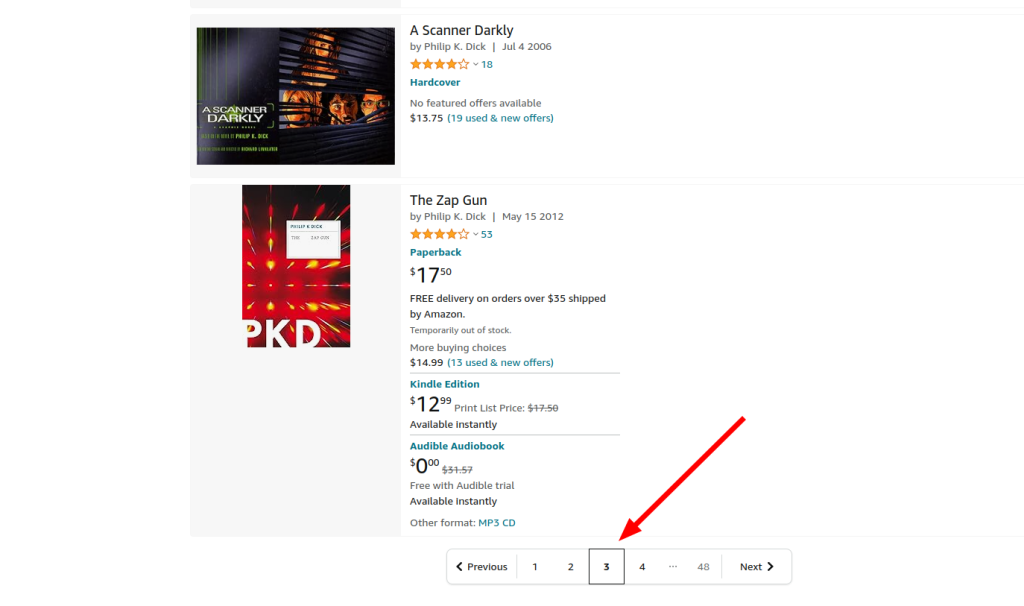

And since he’s been on my mind lately:

So the blog needs some kind of “sort” filter and a pagination bar.

Adding a pagination UI element

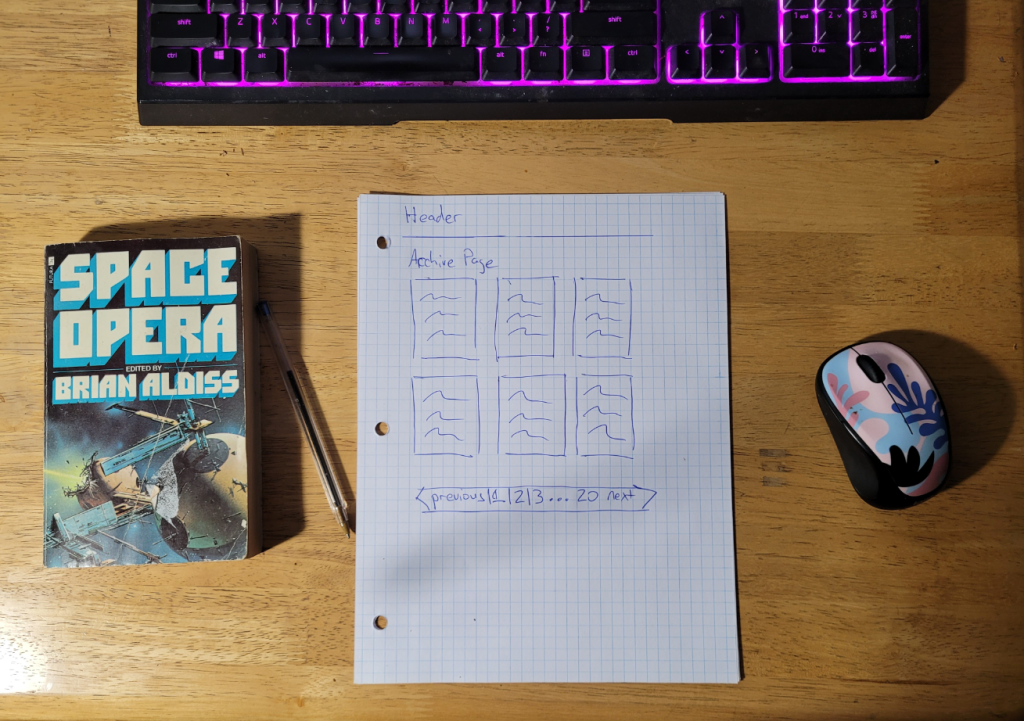

Here’s a mockup:

I’m going to try to implement this on a single route (app/routes/blog.archive.tsx). The values required for pagination will be supplied by this GraphQL query:

{
  posts (first: 100, after: "", where: {orderby: {field:DATE, order: DESC}} ) {
    pageInfo {
      hasNextPage
      endCursor
    }
    edges {
      cursor
    }
  }
}

Note the first: 100 condition. By default, WPGraphQL sets a graphql_connection_max_query_amount limit of 100 posts: https://www.wpgraphql.com/filters/graphql_connection_max_query_amount. This can be overridden with a filter on WordPress. I can work with the limit of 100 posts for now. If there were more than 100 posts in the database, the hasNextPage property in the query’s result would be set to true. The value of the endCursor property could be used to get more data from WordPress.
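If the site ever grows past 100 posts, the hasNextPage / endCursor pair could drive a loop that keeps requesting batches. A minimal sketch, assuming a hypothetical `fetchPage` function that runs the cursor query for a given `after` value and returns the `posts` part of the response (the names `CursorPage`, `FetchCursorPage`, `fetchAllCursors` and the `maxRequests` default are all made up for illustration):

```typescript
// The part of the query response this sketch cares about.
interface CursorPage {
  pageInfo: { hasNextPage: boolean; endCursor: string | null };
  edges: { cursor: string }[];
}

// Hypothetical: runs the cursor query with the given `after` value.
type FetchCursorPage = (after: string) => Promise<CursorPage>;

// Keep requesting batches until hasNextPage is false, collecting every cursor.
async function fetchAllCursors(
  fetchPage: FetchCursorPage,
  maxRequests = 50 // assumed safety valve so a bad response can't loop forever
): Promise<string[]> {
  const cursors: string[] = [];
  let after = "";
  for (let i = 0; i < maxRequests; i++) {
    const page = await fetchPage(after);
    cursors.push(...page.edges.map((edge) => edge.cursor));
    if (!page.pageInfo.hasNextPage || !page.pageInfo.endCursor) break;
    after = page.pageInfo.endCursor;
  }
  return cursors;
}
```

In the app, `fetchPage` would presumably wrap a `client.query` call; passing it in as a parameter keeps the loop easy to test with fake data.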

I’m going to check out a new branch and fiddle around:

$ git checkout -b https://www.wpgraphql.com/filters/graphql_connection_max_query_amount

I think all archive posts can be loaded in a single route. I’ll rename blog.archive._index.tsx to blog.archive.tsx:

$ mv app/routes/blog.archive._index.tsx app/routes/blog.archive.tsx

Copy the cursor query from the GraphiQL IDE to app/models/wp_queries.ts:

// app/models/wp_queries.ts
...
export const ARCHIVE_CURSORS_QUERY = gql(`
  query ArchiveCursors($after: String!) {
    posts (first: 100, after: $after, where: {orderby: {field:DATE, order: DESC}} ) {
      pageInfo {
        hasNextPage
        endCursor
      }
      edges {
        cursor
      }
    }
  }
`);
...

For peace of mind, copy blog.archive.tsx to blog.archiveBak.tsx:

$ cp app/routes/blog.archive.tsx app/routes/blog.archiveBak.tsx

I can delete most of the blog.archive.tsx file’s contents and have a copy of the original file open in my editor for reference. (I’m sure there’s a way of doing the same thing with git.)

The first step is to put together the data that’s needed for pagination:

// app/routes/blog.archive.tsx
import { json, LoaderFunctionArgs } from "@remix-run/node";
import { useLoaderData, useRouteError } from "@remix-run/react";
import { Maybe } from "graphql/jsutils/Maybe";
import { createApolloClient } from "lib/createApolloClient";
import {
  ARCHIVE_CURSORS_QUERY,
} from "~/models/wp_queries";
import type { RootQueryToPostConnectionEdge } from "~/graphql/__generated__/graphql";

export const loader = async ({ request }: LoaderFunctionArgs) => {
  const client = createApolloClient();
  const cursorQueryResponse = await client.query({
    query: ARCHIVE_CURSORS_QUERY,
    variables: {
      after: "",
    },
  });

  if (cursorQueryResponse.errors || !cursorQueryResponse?.data?.posts?.edges) {
    throw new Error("Unable to load post details.");
  }

  const chunkSize = Number(process.env?.ARCHIVE_CHUNK_SIZE) || 15;
  const cursorEdges = cursorQueryResponse.data.posts.edges;
  const pages = cursorEdges.reduce(
    (
      acc: { lastcursor: Maybe<string | null>; pageNumber: number }[],
      edge: RootQueryToPostConnectionEdge,
      index: number
    ) => {
      if ((index + 1) % chunkSize === 0) {
        acc.push({
          lastcursor: edge.cursor,
          pageNumber: acc.length + 1,
        });
      }
      return acc;
    },
    []
  );

  pages.unshift({
    lastcursor: "",
    pageNumber: 0,
  });

  console.log(`pages: ${JSON.stringify(pages, null, 2)}`);

  return json({ pages: pages });
};

export default function Archive() {
  const { pages } = useLoaderData<typeof loader>();

  return (
    <div className="px-6 mx-auto max-w-screen-lg">
      <h2>Working on a new archive page for the blog</h2>
    </div>
  );
}
...

A couple of things to note (for my own reference):

- JSON.stringify(obj, null, 2) is great for logging the full values of objects to the console. Logging GraphQL responses this way also returns the types of each object 🙂
- I’m setting ARCHIVE_CHUNK_SIZE=5 in my dev site’s .env file so I can use smaller chunk sizes in development. The server has to be rebooted to pick up changes in the .env file!
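The chunk-size fallback is worth a quick look on its own. A small sketch of the same `Number(...) || 15` expression pulled into a function; `resolveChunkSize` is a hypothetical name, and the loader reads process.env.ARCHIVE_CHUNK_SIZE inline rather than calling anything like this:

```typescript
// Hypothetical helper: turn the raw env value into a usable chunk size.
function resolveChunkSize(raw: string | undefined, fallback = 15): number {
  const parsed = Number(raw);
  // Number(undefined) and Number("abc") are NaN, and Number("") is 0.
  // All of those are falsy, so `||` falls back to the default.
  return parsed || fallback;
}

console.log(resolveChunkSize("5")); // → 5
console.log(resolveChunkSize(undefined)); // → 15
console.log(resolveChunkSize("abc")); // → 15
```

One thing this makes visible: negative or fractional values would pass straight through, so a stricter check might be worth adding if the variable is ever set by hand in production.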

The call to console.log(`pages: ${JSON.stringify(pages, null, 2)}`); prints the following in my dev environment:

pages: [
  {
    "lastcursor": "",
    "pageNumber": 0
  },
  {
    "lastcursor": "YXJyYXljb25uZWN0aW9uOjExOA==",
    "pageNumber": 1
  },
  {
    "lastcursor": "YXJyYXljb25uZWN0aW9uOjEwOA==",
    "pageNumber": 2
  },
  {
    "lastcursor": "YXJyYXljb25uZWN0aW9uOjk4",
    "pageNumber": 3
  },
  {
    "lastcursor": "YXJyYXljb25uZWN0aW9uOjg3",
    "pageNumber": 4
  },
  {
    "lastcursor": "YXJyYXljb25uZWN0aW9uOjc3",
    "pageNumber": 5
  },
  {
    "lastcursor": "YXJyYXljb25uZWN0aW9uOjY=",
    "pageNumber": 6
  }
]

This gives enough data to start making the pagination element.
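The chunking step can also be written as a standalone function, which makes it easier to try against made-up input. This is a sketch rather than the loader's actual code: `buildPages` and `PageEntry` are hypothetical names, and it works on a plain array of cursor strings instead of GraphQL edges. It also skips one edge case: when the number of posts is an exact multiple of the chunk size, the final cursor has nothing after it and would produce an empty page.

```typescript
interface PageEntry {
  lastCursor: string; // cursor of the last post on the previous page ("" for the first page)
  pageNumber: number; // zero-based
}

function buildPages(cursors: string[], chunkSize: number): PageEntry[] {
  if (!Number.isInteger(chunkSize) || chunkSize < 1) {
    throw new RangeError("chunkSize must be a positive integer");
  }
  // The first page always starts from the beginning of the list.
  const pages: PageEntry[] = [{ lastCursor: "", pageNumber: 0 }];
  // Every chunkSize-th cursor marks the end of a full page.
  for (let i = chunkSize - 1; i < cursors.length; i += chunkSize) {
    // A cursor on the very last post has nothing after it, so skip it.
    if (i === cursors.length - 1) break;
    pages.push({ lastCursor: cursors[i], pageNumber: pages.length });
  }
  return pages;
}

// 12 fake cursors in chunks of 5 give three pages: 5 + 5 + 2 posts.
const sampleCursors = Array.from({ length: 12 }, (_, i) => `c${i + 1}`);
console.log(buildPages(sampleCursors, 5));
```

With the sample input the pages start after "", "c5" and "c10".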

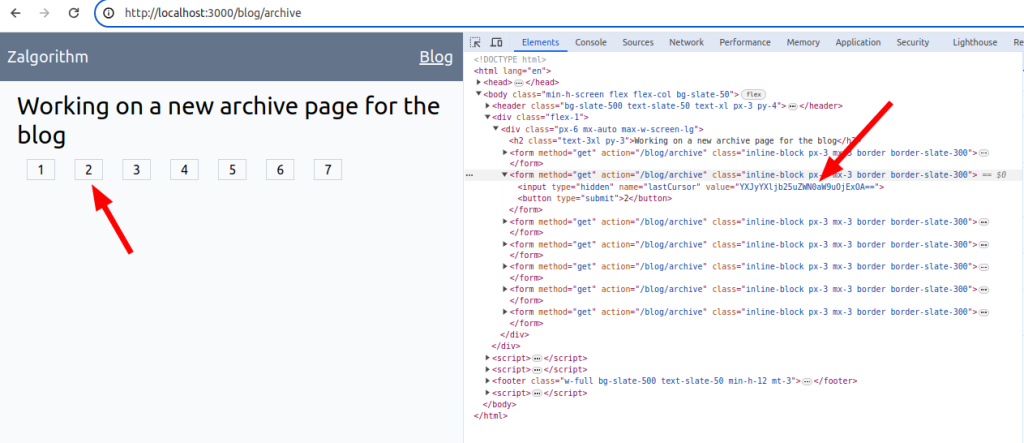

Submitting pagination requests with fetcher.Form

I’m guessing a bit here, but useFetcher is a hook for interacting with the server outside of navigation: https://remix.run/docs/en/main/hooks/use-fetcher. That’s what I’m trying to do.

// app/routes/blog.archive.tsx
...
import { useFetcher, useLoaderData, useRouteError } from "@remix-run/react";
...
export default function Archive() {
  const { pages } = useLoaderData<typeof loader>();
  const fetcher = useFetcher();

  return (
    <div className="px-6 mx-auto max-w-screen-lg">
      <h2>Working on a new archive page for the blog</h2>
      {pages.map((page: any) => (
        <fetcher.Form
          action="?"
          method="get"
          className="inline-block px-3 mx-3 border border-slate-300"
          key={page.pageNumber}
        >
          <input type="hidden" name="lastCursor" value={page.lastCursor} />
          <button type="submit">{page.pageNumber + 1}</button>
        </fetcher.Form>
      ))}
    </div>
  );
}

I’m not sure. I’ll see if I can get it to do something.
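My understanding is that a form submitted with method="get" serializes its inputs into the query string, so the hidden lastCursor input should reach the loader as a search param on request.url. A minimal sketch of reading it back, using only the standard URL and URLSearchParams APIs; `readAfterCursor` is a hypothetical helper and example.com is a placeholder host:

```typescript
// Hypothetical helper: pull the cursor out of a request URL.
// Falls back to "" (start of the list) when the param is missing.
function readAfterCursor(requestUrl: string): string {
  const url = new URL(requestUrl);
  return url.searchParams.get("lastCursor") ?? "";
}

// Simulate what a GET form submission with one hidden input would produce.
// The "=" padding in the cursor is percent-encoded in the query string
// and decoded again by searchParams.get().
const query = new URLSearchParams({ lastCursor: "YXJyYXljb25uZWN0aW9uOjExOA==" });
const requestUrl = `https://example.com/blog/archive?${query}`;

console.log(readAfterCursor(requestUrl)); // → YXJyYXljb25uZWN0aW9uOjExOA==
console.log(readAfterCursor("https://example.com/blog/archive")); // → (empty string)
```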

This works:

// app/routes/blog.archive.tsx
import { json, LoaderFunctionArgs } from "@remix-run/node";
import { useFetcher, useLoaderData, useRouteError } from "@remix-run/react";
import { Maybe } from "graphql/jsutils/Maybe";
import { createApolloClient } from "lib/createApolloClient";
import {
  ARCHIVE_CURSORS_QUERY,
  ARCHIVE_POSTS_QUERY,
} from "~/models/wp_queries";
import type {
  PostConnectionEdge,
  RootQueryToPostConnectionEdge,
} from "~/graphql/__generated__/graphql";
import PostExcerptCard from "~/components/PostExcerptCard";

interface Page {
  pageNumber: number;
  lastCursor: string;
}

interface FetcherTypeData {
  postEdges: PostConnectionEdge[];
  pageNumber: number;
}

export const loader = async ({ request }: { request: Request }) => {
  const url = new URL(request.url);
  const after = url.searchParams.get("lastCursor") || "";
  const pageNumber = url.searchParams.get("pageNumber");
  let pages;
  const client = createApolloClient();

  // Only the initial request (no query params) builds the list of pages.
  // Requests made through the fetcher only need a batch of posts.
  if (!after && !pageNumber) {
    const cursorQueryResponse = await client.query({
      query: ARCHIVE_CURSORS_QUERY,
      variables: {
        after: "",
      },
    });

    if (
      cursorQueryResponse.errors ||
      !cursorQueryResponse?.data?.posts?.edges
    ) {
      throw new Error("Unable to load post details.");
    }

    const chunkSize = Number(process.env?.ARCHIVE_CHUNK_SIZE) || 15;
    const cursorEdges = cursorQueryResponse.data.posts.edges;
    pages = cursorEdges.reduce(
      (
        acc: { lastCursor: Maybe<string | null>; pageNumber: number }[],
        edge: RootQueryToPostConnectionEdge,
        index: number
      ) => {
        if ((index + 1) % chunkSize === 0) {
          acc.push({
            lastCursor: edge.cursor,
            pageNumber: acc.length + 1,
          });
        }
        return acc;
      },
      []
    );

    // Handle the case of the total number of posts being a multiple of the
    // chunk size: the last cursor would point to an empty page.
    if (cursorEdges.length % chunkSize === 0) {
      pages.pop();
    }

    pages.unshift({
      lastCursor: "",
      pageNumber: 0,
    });
  } else {
    pages = [];
  }

  const postsQueryResponse = await client.query({
    query: ARCHIVE_POSTS_QUERY,
    variables: {
      after: after,
    },
  });

  if (postsQueryResponse.errors || !postsQueryResponse?.data?.posts?.edges) {
    throw new Error("An error was returned loading the posts");
  }

  const postEdges = postsQueryResponse.data.posts.edges;

  return json({ pages: pages, postEdges: postEdges, pageNumber: pageNumber });
};

export default function Archive() {
  const initialData = useLoaderData<typeof loader>();
  let postEdges = initialData.postEdges;
  const fetcher = useFetcher();
  const fetcherData = fetcher.data as FetcherTypeData;
  let currentPageNumber: number;

  // Once the fetcher has returned data, display it instead of the initial batch.
  if (fetcherData && fetcherData?.postEdges) {
    postEdges = fetcherData.postEdges;
    currentPageNumber = Number(fetcherData?.pageNumber);
  }

  return (
    <div className="px-6 mx-auto max-w-screen-lg">
      <h2 className="text-3xl py-3">
        Working on a new archive page for the blog
      </h2>
      <div className="grid grid-cols-1 md:grid-cols-2 xl:grid-cols-3 gap-6">
        {postEdges.map((edge: PostConnectionEdge) => (
          <PostExcerptCard
            title={edge.node?.title}
            date={edge.node?.date}
            featuredImage={edge.node?.featuredImage?.node?.sourceUrl}
            excerpt={edge.node?.excerpt}
            authorName={edge.node?.author?.node?.name}
            slug={edge.node?.slug}
            key={edge.node.id}
          />
        ))}
      </div>
      {initialData.pages.map((page: Page) => (
        <fetcher.Form
          action="?"
          method="get"
          className={`inline-block px-3 mx-3 border border-slate-300 ${
            currentPageNumber === page.pageNumber ? "bg-red-200" : "bg-blue-200"
          }`}
          key={page.pageNumber}
        >
          <input type="hidden" name="lastCursor" value={page.lastCursor} />
          <input type="hidden" name="pageNumber" value={page.pageNumber} />
          <button type="submit">{page.pageNumber + 1}</button>
        </fetcher.Form>
      ))}
    </div>
  );
}

export function ErrorBoundary() {
  const error = useRouteError();
  const errorMessage = error instanceof Error ? error.message : "Unknown error";

  return (
    <div className="mx-auto max-w-3xl px-5 py-4 my-10 bg-red-200 border-2 border-red-700 rounded break-all">
      <h1>App Error</h1>
      <pre>{errorMessage}</pre>
    </div>
  );
}

Maybe that’s a weird way of dealing with pagination. If I go with it I’ll look into using fetcher.state === 'submitting' to add a "pending" class to the selected pagination element.
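A rough sketch of how the pending class could be picked, kept as a plain function so it can be tried outside of React. As far as I know, fetcher.state is one of "idle", "submitting" or "loading", and I haven't confirmed which one a GET fetcher.Form reports, so this treats anything other than "idle" as pending. `pageButtonClass` is a hypothetical helper, the yellow class is an arbitrary choice, and `submittedPage` is assumed to come from something like the in-flight form data:

```typescript
// The states a Remix fetcher can report.
type FetcherState = "idle" | "submitting" | "loading";

// Hypothetical helper: choose a background class for one pagination button.
function pageButtonClass(
  state: FetcherState,
  submittedPage: number | null, // page number of the in-flight request, if any
  currentPage: number | null, // page number of the posts currently displayed
  pageNumber: number // the button being rendered
): string {
  // Any non-idle state counts as pending for the button that was clicked.
  if (state !== "idle" && submittedPage === pageNumber) return "bg-yellow-200";
  if (currentPage === pageNumber) return "bg-red-200";
  return "bg-blue-200";
}

console.log(pageButtonClass("loading", 2, 0, 2)); // → bg-yellow-200
console.log(pageButtonClass("idle", null, 2, 2)); // → bg-red-200
console.log(pageButtonClass("idle", null, 2, 1)); // → bg-blue-200
```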

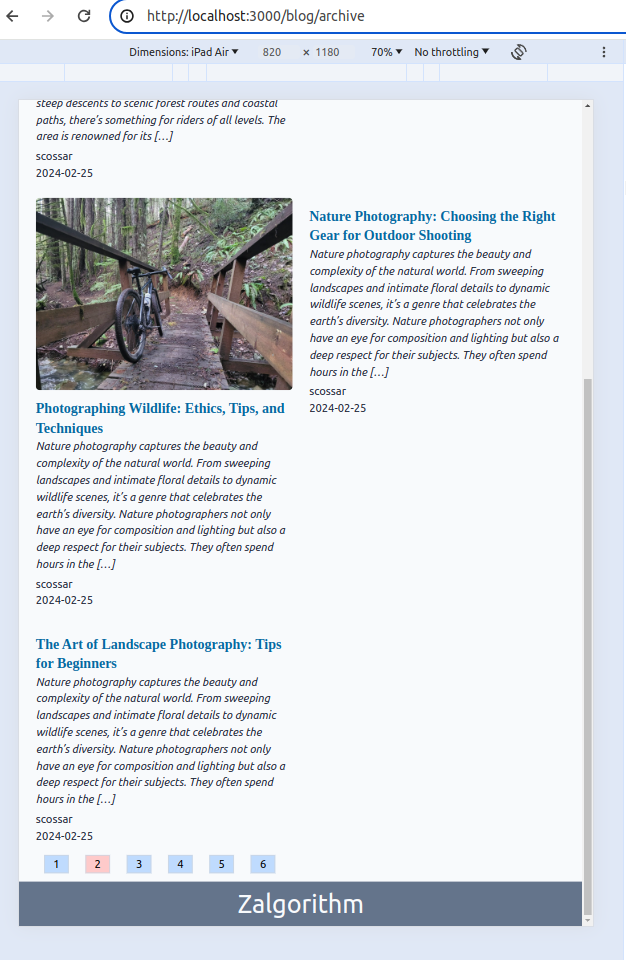

Styling issues aside, it’s working well:

I’m going to hold off on pushing this to the live site. Tomorrow I’ll try creating pagination with Link components instead of using fetcher.Form.